The first thing you will come across when you start learning Apache Spark is dataframes.

Dataframes in Apache Spark are similar to tables in an RDBMS, except that the data is distributed across the cluster and Spark optimizes the queries you run against it. Dataframes are table-like structures with named columns, much like the data frames in R and in Python's pandas library.

Let's see how it works.

I am using the file present here for demonstration.

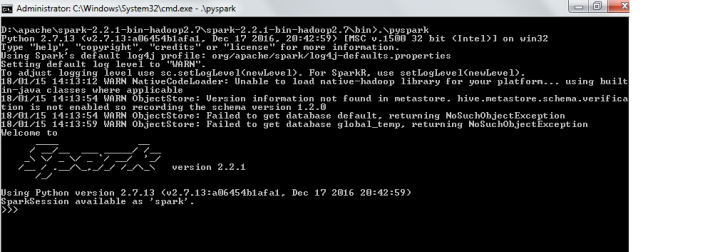

To start the Apache Spark engine with the Python API, go to the bin directory of the Apache Spark installation and run the pyspark command.

Something like this should show up in the command prompt.

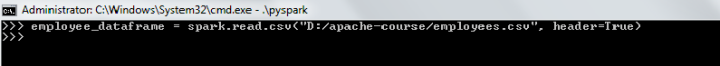

To import the data in the file, use the command:

>> employee_dataframe = spark.read.csv("D:/apache-course/employees.csv", header=True)

The parameter “header=True” tells Spark that column names are present in the file.

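The command will give no output. Spark reads the header line to pick up the column names, but it evaluates dataframes lazily, so the rest of the file is only read when an operation actually needs the data.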

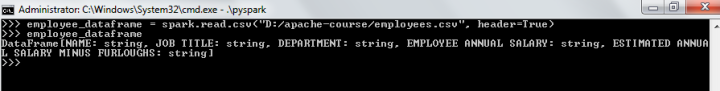

To see the dataframe that was created, use the command:

>> employee_dataframe

The above command will show the structure of the dataframe just created.

Other ways to see the structure are:

>> employee_dataframe.schema

To get a slightly more readable output, use

>> employee_dataframe.printSchema()

To get a random sample of the data, use

>> sample = employee_dataframe.sample(False, 0.1)

The first argument, False, tells Spark that we want sampling without replacement. The second argument tells Spark that we want a sample of approximately 10% of the total data.
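One way to picture why the sample is only approximately 10%: sampling by fraction without replacement behaves like an independent coin flip for each row. The plain-Python sketch below illustrates that idea only; it is not Spark's implementation, and bernoulli_sample is a hypothetical helper written for this example.

```python
import random


def bernoulli_sample(rows, fraction, seed=None):
    """Keep each row independently with probability `fraction` (no replacement)."""
    rng = random.Random(seed)
    return [row for row in rows if rng.random() < fraction]


rows = list(range(10_000))  # stand-in for 10,000 records
sample_a = bernoulli_sample(rows, 0.1, seed=1)
sample_b = bernoulli_sample(rows, 0.1, seed=2)

# Both sizes land near 1,000, but they are not guaranteed to be exactly 1,000.
print(len(sample_a), len(sample_b))
```

The sample function in Spark also accepts an optional third argument, a seed, which is useful when the same sample is needed on every run.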

We also have a filter function to filter the records based on a given condition.

For a complete set of functions available for dataframes, see this page.

Thank you for reading this article. Many more are on their way so stay tuned.
