The previous article, Structure of JVM – Java memory model, briefly mentions bytecode execution modes, and the article JVM internal threads provides additional insight into the internal architecture of JVM execution. In this article we focus on Just In Time (JIT) compilation and some of its basic optimisation techniques. We also discuss the performance impact of one optimisation technique, namely method inlining. In the remainder of this article we focus solely on the HotSpot JVM; however, the principles are valid in general.

HotSpot JVM is a mixed-mode VM, which means that it starts off interpreting the bytecode but can compile code into highly optimised native machine code for faster execution. This optimised code runs very fast, and its performance is in many cases comparable to that of C/C++ code. JIT compilation happens on a per-method basis at runtime, once a method has been run a number of times and is considered a hot method. The compilation into machine code happens on a separate JVM thread and does not interrupt the execution of the program. While the compiler thread is compiling a hot method, the JVM keeps using the interpreted version of the method until the compiled version is ready. Thanks to the runtime characteristics (profiling data) collected while the code runs, HotSpot JVM can make sophisticated decisions about how to optimise the code.
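The counter-based switch from interpretation to compiled code described above can be pictured with a toy model. The sketch below is not how HotSpot is implemented: the class `ToyMixedModeMethod` and its `threshold` are hypothetical, the two function arguments merely stand in for interpreted bytecode and JIT-compiled machine code, and the switch happens synchronously, whereas HotSpot compiles on a separate thread and also counts loop iterations, not just invocations.

```scala
// Toy model (hypothetical, not HotSpot's implementation) of mixed-mode execution:
// calls go to the "interpreted" function until the invocation counter reaches
// the threshold; after that, calls go to the "compiled" function.
final class ToyMixedModeMethod[A, B](
    threshold: Int,        // hypothetical hotness threshold
    interpreted: A => B,   // stands in for bytecode interpretation
    compiled: A => B       // stands in for JIT-compiled machine code
) {
  private var invocations = 0
  private var isCompiled = false

  def apply(arg: A): B =
    if (isCompiled) compiled(arg)
    else {
      invocations += 1
      // The method is now considered hot: later calls use the compiled version.
      // (HotSpot would compile asynchronously and keep interpreting meanwhile.)
      if (invocations >= threshold) isCompiled = true
      interpreted(arg)
    }

  def compiledYet: Boolean = isCompiled
  def invocationCount: Int = invocations
}

object ToyMixedModeDemo {
  def main(args: Array[String]): Unit = {
    val inc = new ToyMixedModeMethod[Int, Int](
      threshold = 3,
      interpreted = i => i + 1,
      compiled = i => i + 1
    )
    val results = (1 to 5).map(n => inc(n))
    println(s"results=$results compiled=${inc.compiledYet} interpretedCalls=${inc.invocationCount}")
  }
}
```

Both functions compute the same result; only the path taken changes once the method becomes hot.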

The Java HotSpot VM can run in two separate modes (C1 and C2), and each mode is usually preferred in a different situation:

- C1 (-client) – used for applications where quick startup and solid optimisation are needed; GUI applications are typical candidates.

- C2 (-server) – used for long-running server applications.

These two compilers use different techniques for JIT compilation, so the same method can end up as very different machine code. Modern Java applications can take advantage of both: starting with Java SE 7, a feature called tiered compilation is available. The application starts with C1 compilation, which enables fast startup, and once the application is warmed up, the C2 compiler takes over. Since Java SE 8, tiered compilation is the default.

Server optimisations are more aggressive and are based on assumptions which may not always hold. These optimisations are always protected by a guard condition that checks whether the assumption is still correct. If an assumption turns out to be invalid, the JVM reverts the optimisation (deoptimisation) and drops back to interpreted mode. In server mode, HotSpot VM runs a method 10,000 times in interpreted mode before compiling it; this can be adjusted, for example with -XX:CompileThreshold=5000 (the flag is ignored when tiered compilation is enabled). Changing this threshold should be considered carefully, as HotSpot VM works best when it can accumulate enough statistics to make intelligent decisions about what to compile. To inspect what is being compiled, use -XX:+PrintCompilation.
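To check which JIT configuration a running JVM actually uses, the standard `java.lang.management` API can be queried from Scala. The sketch below is a minimal example, assuming a JVM that exposes a `CompilationMXBean` (`ManagementFactory.getCompilationMXBean()` returns `null` when the JVM has no JIT compiler, for instance under `-Xint`); the object name `JitInfo` is hypothetical. It also prints the flags the JVM was started with, which is a quick way to confirm that options such as `-XX:+PrintCompilation` or `-XX:CompileThreshold` were actually passed.

```scala
import java.lang.management.ManagementFactory

object JitInfo {
  // Describes the JIT compiler of the running JVM, or None when there is none (e.g. -Xint).
  def describeJit(): Option[String] =
    Option(ManagementFactory.getCompilationMXBean()).map { jit =>
      val time =
        if (jit.isCompilationTimeMonitoringSupported()) s"${jit.getTotalCompilationTime()} ms"
        else "not supported"
      s"JIT compiler: ${jit.getName()}, total compilation time: $time"
    }

  def main(args: Array[String]): Unit = {
    // Flags passed to this JVM, e.g. -XX:+PrintCompilation or -XX:-TieredCompilation.
    val flags = ManagementFactory.getRuntimeMXBean().getInputArguments()
    println(s"JVM input arguments: $flags")
    println(describeJit().getOrElse("No JIT compiler (interpreter only)"))
  }
}
```

The reported compiler name and compilation time depend on the JVM vendor, version and flags in use.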

Among the most common JIT compilation techniques used by HotSpot VM is method inlining, which is the practice of substituting the body of a method into the places where the method is called. This technique saves the cost of calling the method. HotSpot limits the size of a method that can be inlined (see -XX:MaxInlineSize). Another commonly used technique is monomorphic dispatch. It relies on the observation that, at a given call site, the receiver object is most of the time of a single type. For such call sites the exact method definition is known in advance, so the overhead of a virtual method lookup can be replaced by a cheap guard on the receiver's type, and the JIT compiler can emit faster optimised machine code. There are many other optimisation techniques, such as loop optimisation, dead code elimination, intrinsics and others.
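Monomorphic dispatch is easier to picture with a source-level analogue. The sketch below is hypothetical (the types `Shape`, `Square` and `Circle` and the object `MonomorphicSketch` are made up for illustration) and is not the machine code HotSpot emits. `totalAreaGuarded` does by hand what the JIT compiler may do when profiling shows that a call site only ever sees `Square`: check the receiver's class (the guard) and use the inlined body of `Square.area` on the fast path. Here the slow path simply falls back to a virtual call; depending on the situation, HotSpot may instead deoptimise the compiled code.

```scala
// Hypothetical types used only for illustration.
trait Shape { def area: Double }
final class Square(val side: Double) extends Shape { def area: Double = side * side }
final class Circle(val radius: Double) extends Shape { def area: Double = math.Pi * radius * radius }

object MonomorphicSketch {
  // Ordinary virtual call: the target of area is looked up from the receiver's class.
  def totalAreaVirtual(shapes: Array[Shape]): Double = {
    var sum = 0.0
    var i = 0
    while (i < shapes.length) {
      sum += shapes(i).area
      i += 1
    }
    sum
  }

  // Hand-written analogue of a guarded monomorphic call site.
  def totalAreaGuarded(shapes: Array[Shape]): Double = {
    var sum = 0.0
    var i = 0
    while (i < shapes.length) {
      val s = shapes(i)
      if (s.getClass eq classOf[Square]) {
        // Fast path: receiver has the profiled type, so Square.area is "inlined".
        val sq = s.asInstanceOf[Square]
        sum += sq.side * sq.side
      } else {
        // Slow path: the type assumption does not hold; use an ordinary virtual call.
        sum += s.area
      }
      i += 1
    }
    sum
  }
}
```

Both methods compute the same sum; the guarded one avoids the virtual lookup whenever the receiver is a `Square`.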

The performance gain from inlining can be demonstrated with a simple piece of Scala code:

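```scala
class IncWhile {

  // Calls inc one billion times and returns the final value of i.
  def main(): Int = {
    var i: Int = 0
    var limit = 0
    while (limit < 1000000000) {
      i = inc(i)
      limit = limit + 1
    }
    i
  }

  // Tiny method body: a candidate for inlining by the JIT compiler.
  def inc(i: Int): Int = i + 1
}
```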

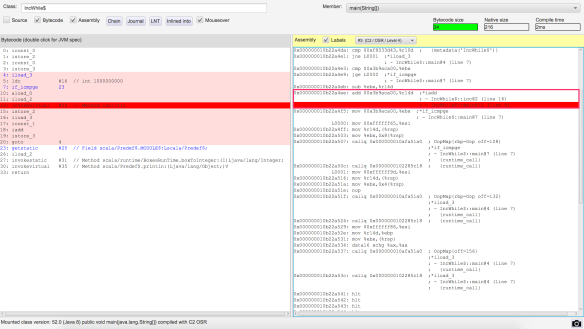

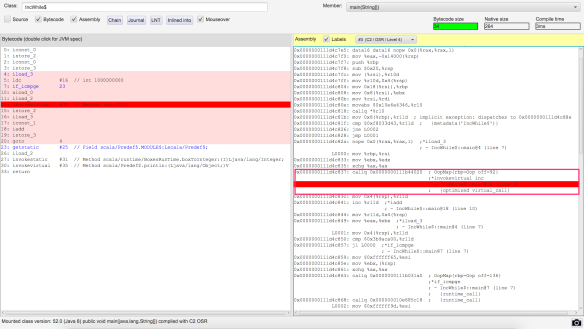

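Method inc is eligible for inlining because its body is smaller than 35 bytes of JVM bytecode (the actual size of the inc method is 9 bytes). The inlining optimisation can be verified by looking at the machine code produced by the JIT compiler (for example with -XX:+UnlockDiagnosticVMOptions -XX:+PrintAssembly, which requires the hsdis disassembler library).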
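To make the transformation concrete, here is a source-level picture of what inlining inc into the loop amounts to. It is only an analogy: HotSpot inlines while compiling bytecode to machine code, not at the Scala source level. The object `InliningSketch` and the `iterations` parameter are hypothetical; the parameter replaces the hard-coded 1000000000 so the example runs quickly.

```scala
object InliningSketch {
  def inc(i: Int): Int = i + 1

  // Before inlining: every iteration performs a call to inc.
  def loopWithCall(iterations: Int): Int = {
    var i = 0
    var limit = 0
    while (limit < iterations) {
      i = inc(i)
      limit = limit + 1
    }
    i
  }

  // After inlining: the body of inc (i + 1) is substituted at the call site,
  // so no call remains in the loop.
  def loopInlined(iterations: Int): Int = {
    var i = 0
    var limit = 0
    while (limit < iterations) {
      i = i + 1
      limit = limit + 1
    }
    i
  }

  def main(args: Array[String]): Unit =
    println(loopWithCall(1000) == loopInlined(1000))
}
```

Both variants return the same value; the second one simply has no call left in its loop body.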

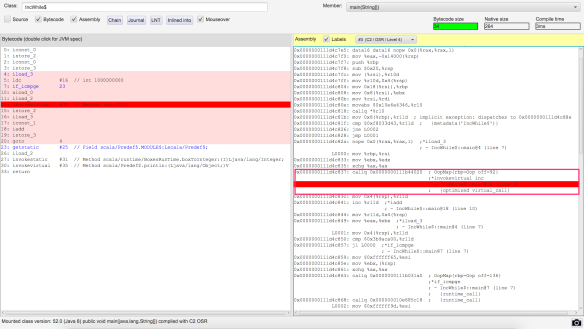

The difference is obvious when compared with the machine code generated with inlining disabled, which can be done with -XX:CompileCommand=dontinline,com/jaksky/jvm/tests/jit/IncWhile.inc

The difference in runtime characteristics is also significant, as the benchmark results show. With inlining disabled:

```text
[info] Result "com.jaksky.jvm.tests.jit.IncWhile.main":
[info] 2112778741.540 ±(99.9%) 9778298.985 ns/op [Average]
[info] (min, avg, max) = (2040573480.000, 2112778741.540, 2192003946.000), stdev = 28831537.237
[info] CI (99.9%): [2103000442.555, 2122557040.525] (assumes normal distribution)
[info] # Run complete. Total time: 00:08:03
[info] Benchmark      Mode  Cnt           Score         Error  Units
[info] IncWhile.main  avgt  100  2112778741.540 ± 9778298.985  ns/op
```

With inlining enabled:

```text
[info] Result "com.jaksky.jvm.tests.jit.IncWhile.main":
[info] 2.739 ±(99.9%) 0.029 ns/op [Average]
[info] (min, avg, max) = (2.618, 2.739, 3.167), stdev = 0.084
[info] CI (99.9%): [2.710, 2.767] (assumes normal distribution)
[info] # Run complete. Total time: 00:04:13
[info] Benchmark      Mode  Cnt  Score   Error  Units
[info] IncWhile.main  avgt  100  2.739 ± 0.029  ns/op
```

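So inlining a method body can bring quite a significant performance gain. Note, however, that about 2.7 ns per call is far too little time to execute one billion loop iterations: once inc is inlined, the JIT compiler is free to apply further optimisations to the now call-free loop, so the measured difference reflects inlining together with the optimisations it enables.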
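The figures above can be checked with a little arithmetic. The sketch below (the object name `BenchmarkArithmetic` is hypothetical; the numbers are copied from the JMH output above) divides the non-inlined average by the one billion loop iterations and compares the two averages.

```scala
object BenchmarkArithmetic {
  // Average time per call of IncWhile.main reported by JMH, in nanoseconds.
  val withoutInliningNs = 2112778741.540
  val withInliningNs = 2.739
  // Loop iterations performed by one call of IncWhile.main.
  val iterations = 1000000000L

  // Average cost of one loop iteration when inc is not inlined.
  def nsPerIterationWithoutInlining: Double = withoutInliningNs / iterations

  // How many times faster the run with inlining enabled is.
  def speedup: Double = withoutInliningNs / withInliningNs

  def main(args: Array[String]): Unit = {
    println(f"without inlining: $nsPerIterationWithoutInlining%.2f ns per iteration")
    println(f"speedup: $speedup%.3e")
  }
}
```

This gives roughly 2.1 ns per iteration without inlining and a ratio of about 7.7 × 10^8 between the two averages.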

In this post we discussed the mechanics of Java JIT compilation and some of the optimisation techniques it uses. We focused in particular on one of the simplest optimisation techniques, method inlining, and demonstrated the performance gain brought by eliminating a method call represented by the invokevirtual bytecode instruction. Scala also offers the @inline annotation, which requests inlining at the Scala compiler level and may help with the performance of the code under development. All the code for running the experiments is available online on my GitHub account.
