Let us consider transformations of the space $\mathbb{R}^n$. How does Lebesgue measure change under such a transformation? And how do integrals change? The general case is answered by Jacobi’s formula for integration by substitution. We will start out slowly and only look at how the measure of sets is transformed by linear mappings.

It is folklore in basic linear algebra courses, when the determinant of a matrix is introduced, to convey the idea that it measures the size of the parallelogram (or parallelepiped) spanned by the column vectors of the matrix. The following theorem shows why this folklore is true. Of course, this rests on the axiomatic description of the determinant, which already encodes the notion of size. Starting from purely axiomatic reasoning, we can thus connect the axioms of determinant theory to their actual meaning in measure theory.

First, recall the definition of the pushforward measure. Let $(\Omega, \mathcal{A})$ and $(\Omega', \mathcal{A}')$ be measurable spaces, and let $f\colon \Omega \to \Omega'$ be a measurable mapping (i.e. the preimage $f^{-1}(A')$ of every $A' \in \mathcal{A}'$ lies in $\mathcal{A}$; we shall not deal with finer details of measure theory here). Let $\mu$ be a measure on $\mathcal{A}$. Then we define a measure on $\mathcal{A}'$ in the natural way:

$$f(\mu)(A') := \mu\bigl(f^{-1}(A')\bigr), \qquad A' \in \mathcal{A}'.$$
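For a finite set carrying a measure given by point masses, the definition of $f(\mu)$ can be evaluated directly. The following Python sketch is only an illustration: the fair-die measure `mu`, the parity map `parity` and the helper `pushforward` are hypothetical examples and do not appear in the text. It computes $f(\mu)(A') = \mu(f^{-1}(A'))$ by summing the masses of all points that $f$ sends into $A'$.

```python
from fractions import Fraction

def pushforward(mu, f, target_set):
    """Evaluate f(mu)(A') = mu(f^{-1}(A')) for a measure given by point masses."""
    # f^{-1}(A') consists of all points omega whose image lies in A'.
    preimage = [omega for omega in mu if f(omega) in target_set]
    return sum((mu[omega] for omega in preimage), Fraction(0))

# Hypothetical example: a fair die, pushed forward by the parity map.
mu = {omega: Fraction(1, 6) for omega in range(1, 7)}
parity = lambda omega: omega % 2

print(pushforward(mu, parity, {0}))     # mass of {2, 4, 6}: 1/2
print(pushforward(mu, parity, {0, 1}))  # the total mass is preserved: 1
```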

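In what follows, $\Omega = \Omega' = \mathbb{R}^n$, $\mathcal{A} = \mathcal{A}' = \mathcal{B}^n$ is the Borel $\sigma$-algebra, and $\lambda$ denotes Lebesgue measure on $\mathcal{B}^n$.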

Theorem (Transformation of Measure): Let $f\colon \mathbb{R}^n \to \mathbb{R}^n$ be a bijective linear mapping and let $A \in \mathcal{B}^n$ be a measurable set. Then the pushforward measure satisfies:

$$f(\lambda)(A) = \frac{1}{|\det f|}\,\lambda(A), \qquad \text{or, equivalently,} \qquad \lambda\bigl(f(A)\bigr) = |\det f|\,\lambda(A).$$
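A quick numerical sanity check of the Theorem in the plane, assuming $A$ is a polygon: a linear map sends a polygon to the polygon spanned by the image vertices, so both $\lambda(A)$ and $\lambda(f(A))$ can be computed exactly with the shoelace formula. The triangle `A`, the matrix `f` and the helper names are arbitrary illustrative choices.

```python
def shoelace_area(vertices):
    """Area of a simple polygon whose vertices are listed in order (shoelace formula)."""
    twice_area = 0.0
    for k in range(len(vertices)):
        x0, y0 = vertices[k]
        x1, y1 = vertices[(k + 1) % len(vertices)]
        twice_area += x0 * y1 - x1 * y0
    # abs() makes the result independent of orientation, which f may reverse.
    return abs(twice_area) / 2.0

def apply_linear(matrix, point):
    """Apply the 2x2 matrix ((a, b), (c, d)) to the point (x, y)."""
    (a, b), (c, d) = matrix
    x, y = point
    return (a * x + b * y, c * x + d * y)

def det2(matrix):
    """Determinant of the 2x2 matrix ((a, b), (c, d))."""
    (a, b), (c, d) = matrix
    return a * d - b * c

# Hypothetical triangle A and invertible linear map f (det f = 7).
A = [(0.0, 0.0), (2.0, 0.0), (0.5, 1.5)]
f = ((2.0, 1.0), (-1.0, 3.0))

f_of_A = [apply_linear(f, p) for p in A]
print(shoelace_area(A))                 # lambda(A) = 1.5
print(shoelace_area(f_of_A))            # lambda(f(A)) = 10.5
print(abs(det2(f)) * shoelace_area(A))  # |det f| * lambda(A) = 10.5
```

Replacing `f` by a rotation matrix such as `((0.6, -0.8), (0.8, 0.6))` leaves the area unchanged, in line with Step 1 of the proof below.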

We will start with several Lemmas.

Lemma (The Translation-Lemma): Lebesgue measure is invariant under translations.

Proof: Let $a, b \in \mathbb{R}^n$ with $a \le b$ component-wise. Let $\tau_v$ be the shift by the vector $v \in \mathbb{R}^n$, i.e. $\tau_v(x) = x + v$ and $\tau_v^{-1} = \tau_{-v}$. Then,

$$\tau_v^{-1}\bigl((a, b]\bigr) = (a - v,\, b - v],$$

where the interval is meant as the cartesian product of the component-intervals. For the Lebesgue measure, we get

$$\tau_v(\lambda)\bigl((a, b]\bigr) = \lambda\bigl((a - v,\, b - v]\bigr) = \prod_{i=1}^n \bigl((b_i - v_i) - (a_i - v_i)\bigr) = \prod_{i=1}^n (b_i - a_i) = \lambda\bigl((a, b]\bigr).$$

The measures $\lambda$ and $\tau_v(\lambda)$ hence agree on the rectangles (open on their left-hand sides), i.e. on a semi-ring generating the $\sigma$-algebra $\mathcal{B}^n$. With the usual arguments (which might as well involve $\cap$-stable Dynkin systems, for instance), we find that the measures agree on the whole of $\mathcal{B}^n$.

q.e.d.

Lemma (The constant-multiple-Lemma): Let $\mu$ be a translation-invariant measure on $\mathcal{B}^n$, and $c := \mu([0,1]^n) < \infty$. Then $\mu(A) = c\,\lambda(A)$ for any $A \in \mathcal{B}^n$.

Note that the Lemma only holds for measures that are finite on $[0,1]^n$. For instance, the counting measure is translation-invariant, but it is not a multiple of Lebesgue measure.

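Proof: We divide the set $(0,1]^n$ via a rectangular grid of side-lengths $\frac1k$, $k \in \mathbb{N}$, into a disjoint union:

$$(0,1]^n = \bigcup_{j \in \{0, \dots, k-1\}^n} \Bigl(\frac{j}{k} + \bigl(0, \tfrac1k\bigr]^n\Bigr).$$

On the right-hand side there are $k^n$ sets which have the same measure (by translation-invariance). Hence,

$$\mu\Bigl(\bigl(0, \tfrac1k\bigr]^n\Bigr) = \frac{1}{k^n}\,\mu\bigl((0,1]^n\bigr).$$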

Here, we distinguish three cases.

Case 1: $\mu((0,1]^n) = 1$. Then,

$$\mu\Bigl(\bigl(0, \tfrac1k\bigr]^n\Bigr) = \frac{1}{k^n} = \lambda\Bigl(\bigl(0, \tfrac1k\bigr]^n\Bigr).$$

By choosing appropriate grids and further translations, we get that $\mu(I) = \lambda(I)$ for any rectangle $I$ with rational bounds. Via the usual arguments, $\mu = \lambda$ on the whole of $\mathcal{B}^n$.

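Case 2: $\mu((0,1]^n) \neq 0$ and $\mu((0,1]^n) \neq 1$. By assumption, however, this measure is finite (by monotony, $\mu((0,1]^n) \le \mu([0,1]^n) = c < \infty$). Setting $\alpha := \mu((0,1]^n)$, we can look at the measure $\alpha^{-1}\mu$, which is again translation-invariant and of course has $\alpha^{-1}\mu((0,1]^n) = 1$. By Case 1, $\alpha^{-1}\mu = \lambda$, i.e. $\mu = \alpha\,\lambda$.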

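Case 3: $\mu((0,1]^n) = 0$. Then, using translation invariance again,

$$\mu(\mathbb{R}^n) = \mu\Bigl(\bigcup_{z \in \mathbb{Z}^n} \bigl(z + (0,1]^n\bigr)\Bigr) = \sum_{z \in \mathbb{Z}^n} \mu\bigl((0,1]^n\bigr) = 0.$$

Again, we get $\mu(A) = 0 = \mu((0,1]^n)\,\lambda(A)$ for all $A \in \mathcal{B}^n$.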

That means, in all cases, $\mu$ is equal to a constant multiple of $\lambda$, the constant being the measure of $(0,1]^n$. That is not quite what we intended, as we wish the constant multiple to be the measure of the compact set $[0,1]^n$.

Remember our setting $c := \mu([0,1]^n)$ and $\alpha := \mu((0,1]^n)$. Let $A \in \mathcal{B}^n$. We distinguish another two cases:

Case $c = 0$. By monotony, $0 \le \alpha \le c = 0$, and Case 3 applies: $\mu(A) = 0 = c\,\lambda(A)$.

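Case $c > 0$. By monotony and translation-invariance,

$$0 < c = \mu\bigl([0,1]^n\bigr) \le \mu\bigl((-1,1]^n\bigr) = 2^n\,\mu\bigl((0,1]^n\bigr) = 2^n\alpha,$$

meaning $\alpha > 0$. Therefore, as $\alpha \le c < \infty$, we get $0 < \alpha < \infty$, and by Cases 1 and 2, $\mu = \alpha\,\lambda$. In particular,

$$c = \mu\bigl([0,1]^n\bigr) = \alpha\,\lambda\bigl([0,1]^n\bigr) = \alpha,$$

and so, $\mu = c\,\lambda$, meaning $\mu(A) = c\,\lambda(A)$.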

q.e.d.

Proof (of the Theorem on Transformation of Measure). We will first show that the measure $f(\lambda)$ is invariant under translations.

We find, for $v \in \mathbb{R}^n$ and $A \in \mathcal{B}^n$, using the Translation-Lemma in the third equality, and the linearity of $f^{-1}$ before that,

$$f(\lambda)(v + A) = \lambda\bigl(f^{-1}(v + A)\bigr) = \lambda\bigl(f^{-1}(v) + f^{-1}(A)\bigr) = \lambda\bigl(f^{-1}(A)\bigr) = f(\lambda)(A),$$

which means that $f(\lambda)$ is indeed invariant under translations.

As $[0,1]^n$ is compact, so is $f^{-1}([0,1]^n)$; remember that continuous images of compact sets are compact (here, the continuous mapping is $f^{-1}$). In particular, $f^{-1}([0,1]^n)$ is bounded, and thus has finite Lebesgue measure.

We set $c := f(\lambda)([0,1]^n) = \lambda\bigl(f^{-1}([0,1]^n)\bigr) < \infty$. By the constant-multiple-Lemma, $f(\lambda)$ is a multiple of Lebesgue measure: we must have

$$f(\lambda) = c\,\lambda.$$

It only remains to prove that $c = \frac{1}{|\det f|}$. There are two routes to do this. We first give the one laid out in Elstrodt’s book (which we are basically following in this whole post). Later, we shall give the more folklore way of concluding this proof.

We consider increasingly general forms of the invertible linear mapping $f$.

Step 1: Let $f$ be orthogonal. Then, for the unit ball $B := \{x \in \mathbb{R}^n : \|x\| \le 1\}$,

$$c\,\lambda(B) = f(\lambda)(B) = \lambda\bigl(f^{-1}(B)\bigr) = \lambda\bigl(f^{*}(B)\bigr) = \lambda(B).$$

As $0 < \lambda(B) < \infty$, this means that $c = 1 = \frac{1}{|\det f|}$, since $(\det f)^2 = \det(f^{*}f) = \det(\mathrm{id}) = 1$.

This step shows for the first time how the properties of a determinant already encode the notion of size: we have only used the basic lemmas on orthogonal matrices (they leave distances unchanged and hence the ball $B$ doesn’t transform; besides, their inverse is their adjoint) and on determinants (they don’t react to orthogonal matrices because of their multiplicative property and because they don’t care for adjoints).

Step 2: Let $f$ have a representation as a diagonal matrix (using the standard basis of $\mathbb{R}^n$). Let us assume w.l.o.g. that $f = \operatorname{diag}(d_1, \dots, d_n)$ with $d_1, \dots, d_n > 0$. The case of negative entries $d_i < 0$ is only notationally cumbersome. We get

$$c = f(\lambda)\bigl([0,1]^n\bigr) = \lambda\bigl(f^{-1}([0,1]^n)\bigr) = \lambda\Bigl(\prod_{i=1}^n \bigl[0, d_i^{-1}\bigr]\Bigr) = \prod_{i=1}^n \frac{1}{d_i} = \frac{1}{|\det f|}.$$

Again, the basic lemmas on determinants already make use of the notion of size without actually saying so. Here, it is the computation of the determinant as the product of the diagonal entries.

Step 3: Let $f$ be linear and invertible, and let $f^{*}$ be its adjoint. Then $f^{*}f$ is non-negative definite (since for $x \in \mathbb{R}^n$, $\langle f^{*}f x, x\rangle = \langle f x, f x\rangle = \|f x\|^2 \ge 0$). By the Principal Axis Theorem, there is some orthogonal matrix $S$ and some diagonal matrix with non-negative entries $D$ with $f^{*}f = S D^2 S^{*}$. As $f$ was invertible, no entry of $D$ may vanish here (since then its determinant would vanish and in particular, $f^{*}f$ and thus $f$ would no longer be invertible). Now, we set

$$T := f S D^{-1},$$

which is orthogonal because of

$$T^{*}T = D^{-1} S^{*} f^{*} f S D^{-1} = D^{-1} S^{*} S D^2 S^{*} S D^{-1} = \mathrm{id}.$$

As $f = T D S^{*}$, we see from Step 1 (for the orthogonal maps $S^{*}$ and $T$) and Step 2 (for $D$)

$$f(\lambda) = T\Bigl(D\bigl(S^{*}(\lambda)\bigr)\Bigr) = T\bigl(D(\lambda)\bigr) = \frac{1}{\det D}\,T(\lambda) = \frac{1}{\det D}\,\lambda = \frac{1}{|\det f|}\,\lambda,$$

by the multiplicative property of determinants again ($|\det f| = |\det T|\,\det D\,|\det S^{*}| = \det D$).
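Step 3 is constructive, so in two dimensions it can be carried out numerically. The sketch below assumes a $2\times 2$ matrix stored as nested lists and realises $S$ as a rotation diagonalising the symmetric matrix $f^{*}f$; the helper names `matmul`, `transpose` and `polar_factors` and the sample matrix are hypothetical. It checks that $T = f S D^{-1}$ is orthogonal, that $f = T D S^{*}$, and that $\det D = |\det f|$.

```python
import math

def matmul(X, Y):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def transpose(X):
    """Transpose (= adjoint for real matrices) of a 2x2 matrix."""
    return [[X[j][i] for j in range(2)] for i in range(2)]

def polar_factors(f):
    """Return (T, D, S) with f = T D S^*, T and S orthogonal, D diagonal and positive.

    Mirrors Step 3: diagonalise f^* f = S D^2 S^* by a rotation S
    (principal axis theorem for a symmetric 2x2 matrix), then set T = f S D^{-1}.
    """
    M = matmul(transpose(f), f)                # f^* f: symmetric, positive definite
    p, q, r = M[0][0], M[0][1], M[1][1]
    theta = 0.5 * math.atan2(2 * q, p - r)     # angle of the principal axes
    S = [[math.cos(theta), -math.sin(theta)], [math.sin(theta), math.cos(theta)]]
    D2 = matmul(transpose(S), matmul(M, S))    # diagonal up to rounding errors
    D = [[math.sqrt(D2[0][0]), 0.0], [0.0, math.sqrt(D2[1][1])]]
    D_inv = [[1.0 / D[0][0], 0.0], [0.0, 1.0 / D[1][1]]]
    T = matmul(f, matmul(S, D_inv))
    return T, D, S

f = [[2.0, 1.0], [-1.0, 3.0]]   # hypothetical invertible matrix, det f = 7
T, D, S = polar_factors(f)
print(matmul(transpose(T), T))  # approximately the identity: T is orthogonal
print(D[0][0] * D[1][1])        # det D, approximately |det f| = 7
```

The rotation angle comes from the closed-form principal axes of a symmetric $2\times 2$ matrix, which keeps the example free of any linear algebra library.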

q.e.d. (Theorem)

As an encore, we show another way to conclude the proof of the Theorem, once all the Lemmas have been shown and applied. This is the more folklore way alluded to in the proof: it uses the fact that any invertible matrix is a product of elementary matrices (and, of course, the multiplicative property of determinants). Hence, we only need to consider elementary matrices.

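Because Step 2 of the proof already dealt with diagonal matrices, we only have to look at shear matrices like $E_{ij}(\alpha)$, $i \neq j$, $\alpha \in \mathbb{R}$. They are the identity matrix with the (off-diagonal) entry $\alpha$ in row $i$ and column $j$. One readily finds $\det E_{ij}(\alpha) = 1$, and $E_{ij}(\alpha)^{-1} = E_{ij}(-\alpha)$. Any vector $x \in \mathbb{R}^n$ is mapped to

$$E_{ij}(\alpha)\,x = x + \alpha x_j e_i.$$

This gives, for $f = E_{ij}(\alpha)$,

$$f^{-1}\bigl([0,1]^n\bigr) = \bigl\{y \in \mathbb{R}^n : y_k \in [0,1] \text{ for } k \neq i,\ -\alpha y_j \le y_i \le 1 - \alpha y_j\bigr\}.$$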

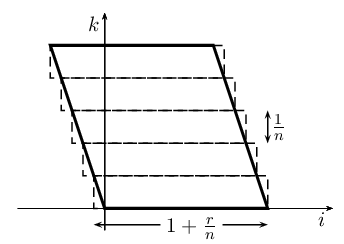

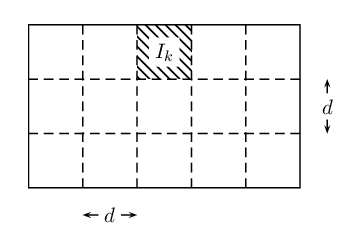

This is a parallelogram (in the coordinates $y_j$, $y_i$) that may be covered by $k$ rectangles as follows: we fix the dimension $i$ and one other dimension $j$ to set a rectangle of height $1 + \frac{|\alpha|}{k}$ and width $\frac1k$ (all other dimension-widths $= 1$; see the image for an illustration). Implicitly, we have demanded that $n \ge 2$ here; but $n = 1$ is uninteresting for the proof, as there are no shear matrices in $\mathbb{R}^1$: every invertible linear mapping of $\mathbb{R}$ is diagonal.

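By monotony, this yields

$$c = \lambda\bigl(f^{-1}([0,1]^n)\bigr) \le k \cdot \frac1k\Bigl(1 + \frac{|\alpha|}{k}\Bigr) = 1 + \frac{|\alpha|}{k}.$$

On the other hand, this parallelogram itself covers the $k$ rectangles of width $\frac1k$ and height $1 - \frac{|\alpha|}{k}$ (for $k > |\alpha|$), and a similar computation shows $c \ge 1 - \frac{|\alpha|}{k}$. In the limit $k \to \infty$, both bounds tend to $1$.

In particular: $c = 1 = \frac{1}{|\det f|}$.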

q.e.d. (Theorem encore)
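The squeeze at the end of the encore can be tabulated exactly. The sketch below is a hypothetical helper (the name `shear_bounds` and the sample entry $\alpha = \frac32$ are ours). It uses exact fractions to compute the inner and outer estimates $k \cdot \frac1k\bigl(1 \mp \frac{|\alpha|}{k}\bigr)$ from the strip covering, so that one can watch both tend to $1$.

```python
from fractions import Fraction

def shear_bounds(alpha, k):
    """Inner and outer estimates for c = lambda(f^{-1}([0,1]^n)), f a shear with entry alpha.

    The parallelogram is cut into k strips of width 1/k along direction j.
    Each strip lies in a rectangle of height 1 + |alpha|/k and, for
    k > |alpha|, contains a rectangle of height 1 - |alpha|/k.
    """
    width = Fraction(1, k)
    outer = k * width * (1 + abs(alpha) * width)
    inner = k * width * max(Fraction(0), 1 - abs(alpha) * width)
    return inner, outer

alpha = Fraction(3, 2)   # hypothetical shear entry
for k in (2, 10, 100, 1000):
    inner, outer = shear_bounds(alpha, k)
    print(k, float(inner), float(outer))
```

The gap between the two bounds is exactly $\frac{2|\alpha|}{k}$, which is the whole content of the limit argument.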

Proving the multidimensional transformation formula for integration by substitution is considerably more difficult than in one dimension, where it basically amounts to reading the chain rule backwards. Let us state the formula here first:

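Theorem (The Transformation Formula, Jacobi): Let $U, V \subset \mathbb{R}^n$ be open sets and let $\varphi\colon U \to V$ be a $C^1$-diffeomorphism (i.e. $\varphi^{-1}$ exists and both $\varphi$ and $\varphi^{-1}$ are $C^1$-functions). Let $f\colon V \to \overline{\mathbb{R}}$ be measurable. Then, $f \circ \varphi$ is measurable and

$$\int_V f(y)\,dy = \int_U f\bigl(\varphi(x)\bigr)\,\bigl|\det\varphi'(x)\bigr|\,dx,$$

where for non-negative $f$ both sides may be $+\infty$, and for general $f$ one side exists if and only if the other one does.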

At the core of the proof is the Theorem on Transformation of Measure that we have proved above. The idea is to approximate $\varphi$ locally by linear mappings, which transform the Lebesgue measure underlying the integral and yield the determinant at each point as a correction factor. The technical difficulty is to show that this approximation does no harm to the evaluation of the integral.

We will need a lemma first, which carries most of the weight of the proof.

The Preparatory Lemma: Let $U, V \subset \mathbb{R}^n$ be open sets and let $\varphi\colon U \to V$ be a $C^1$-diffeomorphism. If $A \subset U$ is a Borel set, then so is $\varphi(A)$, and

$$\lambda\bigl(\varphi(A)\bigr) \le \int_A \bigl|\det\varphi'(x)\bigr|\,dx.$$

Proof: Without loss of generality, we can assume that $\varphi$, $\varphi'$ and $x \mapsto \varphi'(x)^{-1}$ are defined on a compact set containing $A$. We consider, for instance, the sets

$$U_k := \Bigl\{x \in U : \|x\| < k,\ \operatorname{dist}\bigl(x, \mathbb{R}^n \setminus U\bigr) > \frac1k\Bigr\}, \qquad k \in \mathbb{N}.$$

The $U_k$ are open and bounded, $\overline{U_k}$ is hence compact (and contained in $U$), and there is a chain $U_k \subset U_{k+1}$ for all $k \in \mathbb{N}$, with $\bigcup_k U_k = U$. To each $U_k$ there is, hence, a compact superset $\overline{U_k} \subset U$ on which $\varphi$, $\varphi'$ and $x \mapsto \varphi'(x)^{-1}$ are defined. Now, if we can prove the statement of the Preparatory Lemma for Borel sets $A \subset U_k$, it will also be true for Borel sets $A \subset U$ by the monotone convergence theorem (applied to $A \cap U_k \uparrow A$).

As we can consider all relevant functions to be defined on compact sets, and as they are continuous (and even more) by assumption, they are readily found to be uniformly continuous and bounded.

It is obvious that $\varphi(A)$ will be a Borel set, as $\varphi(A) = (\varphi^{-1})^{-1}(A)$ and $\varphi^{-1}$ is continuous.

Let us prove that the Preparatory Lemma holds for rectangles $R$ with rational endpoints that are contained in $U_k$.

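Let $\varepsilon > 0$. There is some $\delta > 0$ such that for any $x \in \overline{R}$, the closed cube $x + [-\delta, \delta]^n$ is contained in $U$; the union $K := \overline{R} + [-\delta, \delta]^n$ of these cubes is compact. Here and below, $\|\cdot\|$ denotes the operator norm with respect to the maximum norm $\|\cdot\|_\infty$. By continuity, there is a finite constant $M$ with

$$\bigl\|\varphi'(x)^{-1}\bigr\| \le M \qquad \text{for all } x \in K,$$

and by uniform continuity, $\delta$ may be chosen small enough such that, for any $y, z \in K$ with $\|y - z\|_\infty \le \delta$, even

$$\bigl\|\varphi'(y) - \varphi'(z)\bigr\| \le \frac{\varepsilon}{M}.$$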

With this $\delta$, we may now sub-divide our rectangle $R$ into disjoint cubes $W_1, \dots, W_m$ of side-length $s \le \delta$ (which is possible since $R$ has rational endpoints) such that $R = \bigcup_{j=1}^m W_j$. In what follows, we shall sometimes need to consider the closure $\overline{W_j}$ for some of the estimates, but we shall not make the proper distinction for reasons of legibility.

For any given $x \in W_j$, every other point $y$ of $W_j$ may at most have distance $s \le \delta$ in each of its components, which ensures

$$\|x - y\|_\infty \le s \le \delta.$$

This, in turn, means $W_j \subset x + [-\delta, \delta]^n \subset K$ (and $x + [-\delta, \delta]^n \subset U$ has been clear because of the construction of $\delta$).

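Now, in every one of the cubes $W_j$, we choose the point $x_j \in \overline{W_j}$ with

$$\bigl|\det\varphi'(x_j)\bigr| = \min_{x \in \overline{W_j}} \bigl|\det\varphi'(x)\bigr|,$$

and we define the linear mapping

$$T_j := \varphi'(x_j).$$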

Remember that for convex sets $C \subset \mathbb{R}^n$, mappings $g$ that are differentiable on an open set containing $C$, and points $a, b \in C$, the mean value theorem shows

$$\|g(b) - g(a)\|_\infty \le \sup_{z \in C} \|g'(z)\|\,\|b - a\|_\infty.$$

Let $x$ be a given point in one of the cubes $W_j$. We apply the mean value theorem to the mapping $g := \varphi - T_j$, which is certainly differentiable, to the points $a = x_j$, $b = x$, and to the convex set $C = \overline{W_j}$:

$$\bigl\|\varphi(x) - \varphi(x_j) - T_j(x - x_j)\bigr\|_\infty \le \sup_{z \in \overline{W_j}} \bigl\|\varphi'(z) - \varphi'(x_j)\bigr\|\,\|x - x_j\|_\infty \le \frac{\varepsilon}{M}\,\|x - x_j\|_\infty.$$

Note that as $x, x_j \in \overline{W_j}$, the whole segment between them lies in $\overline{W_j}$ by convexity, and any two points of $\overline{W_j}$ have distance at most $s \le \delta$; hence the upper estimate of uniform continuity is applicable. Note beyond that, that $g'(z) = \varphi'(z) - T_j$, because the derivative of a linear mapping is the linear mapping itself.

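Now, $\|x - x_j\|_\infty \le s$, as both points are contained in $\overline{W_j}$, and hence the estimate above shows

$$\bigl\|\varphi(x) - \varphi(x_j) - T_j(x - x_j)\bigr\|_\infty \le \frac{\varepsilon}{M}\,s.$$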

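By continuity (and hence boundedness) of $x \mapsto \varphi'(x)^{-1}$, i.e. $\|T_j^{-1}\| \le M$, we also have

$$\bigl\|T_j^{-1}\bigl(\varphi(x) - \varphi(x_j)\bigr) - (x - x_j)\bigr\|_\infty \le \bigl\|T_j^{-1}\bigr\|\,\frac{\varepsilon}{M}\,s \le \varepsilon s,$$

which means $T_j^{-1}\bigl(\varphi(x) - \varphi(x_j)\bigr) \in (x - x_j) + [-\varepsilon s, \varepsilon s]^n$.

Hence:

$$T_j^{-1}\bigl(\varphi(W_j) - \varphi(x_j)\bigr) \subset (W_j - x_j) + [-\varepsilon s, \varepsilon s]^n.$$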

Why all this work? We want to bound the measure of the set $\varphi(W_j)$, and we can get it now: the shift by $-\varphi(x_j)$ is unimportant by translation invariance. And the set $T_j^{-1}\bigl(\varphi(W_j) - \varphi(x_j)\bigr)$ is contained in a cube of side-length $(1 + 2\varepsilon)s$. As promised, we have approximated the mapping $\varphi$ by a linear mapping $T_j$ on a small set, and the transformed set has become only slightly bigger. By the Theorem on Transformation of Measure, this shows

$$\lambda\bigl(\varphi(W_j)\bigr) = \lambda\bigl(\varphi(W_j) - \varphi(x_j)\bigr) = \bigl|\det T_j\bigr|\,\lambda\Bigl(T_j^{-1}\bigl(\varphi(W_j) - \varphi(x_j)\bigr)\Bigr) \le \bigl|\det\varphi'(x_j)\bigr|\,(1 + 2\varepsilon)^n\,\lambda(W_j).$$

Summing over all the cubes $W_j$ of which the rectangle $R$ was comprised (remember that $\varphi$ is a diffeomorphism and disjoint sets are kept disjoint; besides, $x_j$ has been chosen to be the point in $\overline{W_j}$ of smallest $|\det\varphi'|$), we get

$$\lambda\bigl(\varphi(R)\bigr) = \sum_{j=1}^m \lambda\bigl(\varphi(W_j)\bigr) \le (1 + 2\varepsilon)^n \sum_{j=1}^m \bigl|\det\varphi'(x_j)\bigr|\,\lambda(W_j) \le (1 + 2\varepsilon)^n \int_R \bigl|\det\varphi'(x)\bigr|\,dx.$$

Taking $\varepsilon \to 0$ leads to ever finer subdivisions $W_j$ and, in the limit, to the conclusion. The Preparatory Lemma holds for rectangles.
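The cube-wise estimate can be watched at work for a concrete diffeomorphism. The sketch below is a hypothetical example: it takes the polar-coordinate map $\varphi(r, t) = (r\cos t, r\sin t)$ on the rational rectangle $R = (1,2] \times (0,1]$, whose image is an annular sector of area $\frac12(2^2 - 1^2) = \frac32$. It then evaluates $\sum_j |\det\varphi'(x_j)|\,\lambda(W_j)$ with $x_j$ chosen as in the proof. This sum is a lower Riemann sum for $\int_R |\det\varphi'(x)|\,dx$ and approaches $\lambda(\varphi(R))$ from below as the cubes shrink; the factor $(1 + 2\varepsilon)^n$ in the proof is what absorbs the remaining gap.

```python
from fractions import Fraction

def linearised_volume(N):
    """Sum of |det phi'(x_j)| * lambda(W_j) over the N*N cubes W_j of side 1/N
    subdividing R = (1, 2] x (0, 1], for phi(r, t) = (r cos t, r sin t).

    Here |det phi'(r, t)| = r, so its minimum over a closed cube is attained
    at the cube's smallest r-coordinate, mirroring the choice of x_j in the proof.
    """
    s = Fraction(1, N)
    total = Fraction(0)
    for i in range(N):              # columns of cubes along the r-direction
        r_min = 1 + i * s           # smallest r in the i-th column
        for _ in range(N):          # cubes along the t-direction
            total += r_min * s * s  # |det T_j| * lambda(W_j)
    return total

exact_image_area = Fraction(3, 2)   # annular sector: (2^2 - 1^2) / 2 * 1
for N in (1, 10, 100):
    print(N, linearised_volume(N), exact_image_area)
```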

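Now, let $A \subset U_k$ be any Borel set, and let $\varepsilon > 0$. We cover $A$ by disjoint (rational) rectangles $R_1, R_2, \ldots \subset U_k$, such that $\lambda\bigl(\bigcup_i R_i \setminus A\bigr) < \varepsilon$ (this is possible by outer regularity of Lebesgue measure, as every open set is a countable disjoint union of such rectangles). With $C := \sup_{x \in \overline{U_k}} |\det\varphi'(x)| < \infty$, we then get

$$\lambda\bigl(\varphi(A)\bigr) \le \sum_i \lambda\bigl(\varphi(R_i)\bigr) \le \sum_i \int_{R_i} \bigl|\det\varphi'(x)\bigr|\,dx = \int_{\bigcup_i R_i} \bigl|\det\varphi'(x)\bigr|\,dx \le \int_A \bigl|\det\varphi'(x)\bigr|\,dx + C\varepsilon.$$

If we let $\varepsilon \to 0$, we see $\lambda\bigl(\varphi(A)\bigr) \le \int_A \bigl|\det\varphi'(x)\bigr|\,dx$.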

q.e.d. (The Preparatory Lemma)

We didn’t use the full generality that may be possible here: we restricted ourselves to the Borel sets, instead of the larger class of Lebesgue-measurable sets. We shall skip the technical details that are linked to this topic, and switch immediately to the

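Proof of Jacobi’s Transformation Formula: We can focus on non-negative functions $f$ without loss of generality (take the positive and the negative part separately, if needed). By the Preparatory Lemma, we already have, for any Borel set $B \subset V$,

$$\int_V \mathbf{1}_B(y)\,dy = \lambda(B) = \lambda\Bigl(\varphi\bigl(\varphi^{-1}(B)\bigr)\Bigr) \le \int_{\varphi^{-1}(B)} \bigl|\det\varphi'(x)\bigr|\,dx = \int_U \mathbf{1}_B\bigl(\varphi(x)\bigr)\,\bigl|\det\varphi'(x)\bigr|\,dx,$$

which proves the inequality

$$\int_V f(y)\,dy \le \int_U f\bigl(\varphi(x)\bigr)\,\bigl|\det\varphi'(x)\bigr|\,dx$$

for indicator functions $f = \mathbf{1}_B$. By the usual arguments (linearity of the integral, monotone convergence), this also holds for any measurable function $f \ge 0$. To prove the Transformation Formula completely, we apply this inequality to the transformation $\varphi^{-1}\colon V \to U$ and the function $x \mapsto f\bigl(\varphi(x)\bigr)\,\bigl|\det\varphi'(x)\bigr|$ on $U$:

$$\int_U f\bigl(\varphi(x)\bigr)\,\bigl|\det\varphi'(x)\bigr|\,dx \le \int_V f(y)\,\Bigl|\det\varphi'\bigl(\varphi^{-1}(y)\bigr)\Bigr|\,\bigl|\det(\varphi^{-1})'(y)\bigr|\,dy = \int_V f(y)\,dy,$$

since the chain rule yields $\varphi'\bigl(\varphi^{-1}(y)\bigr)\,(\varphi^{-1})'(y) = \mathrm{id}$. This means that the reverse inequality also holds. The Theorem is proved.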

q.e.d. (Theorem)

There may be other, yet more intricate proofs of this Theorem. We shall not give any of them here, but the rather mysterious-looking way in which the determinant pops up in the transformation formula is not the only way to look at it. There is a proof by induction, given in Heuser’s book, where the determinant just appears from the inductive step. However, there is little geometric intuition in this proof, and it is by no means easier than what we did above (as it makes heavy use of the implicit function theorem). Similar things may be said about the rather functional-analytic proof in Königsberger’s book (he concludes the transformation formula via step functions converging in the $L^1$-norm, and finds the determinant pretty much in the same way that we did).

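Let us harvest a little of the hard work we did on the Transformation Formula. The most common example is the integral of the standard normal density, which amounts to the evaluation of

$$\int_{-\infty}^{\infty} e^{-x^2/2}\,dx.$$

This can happen via the transformation to polar coordinates:

$$\varphi\colon (0,\infty) \times (0, 2\pi) \to \mathbb{R}^2, \qquad (r, \theta) \mapsto (r\cos\theta,\ r\sin\theta).$$

For this transformation, which is a $C^1$-diffeomorphism onto all of $\mathbb{R}^2$ except for a set of measure $0$ (the non-negative $x$-axis), we find

$$\det\varphi'(r, \theta) = \det\begin{pmatrix} \cos\theta & -r\sin\theta \\ \sin\theta & r\cos\theta \end{pmatrix} = r.$$

From the Transformation Formula (together with Fubini’s theorem) we now get

$$\Bigl(\int_{-\infty}^{\infty} e^{-x^2/2}\,dx\Bigr)^2 = \int_{\mathbb{R}^2} e^{-(x^2 + y^2)/2}\,d(x, y) = \int_0^{2\pi}\!\!\int_0^\infty e^{-r^2/2}\,r\,dr\,d\theta = 2\pi\,\Bigl[-e^{-r^2/2}\Bigr]_0^\infty = 2\pi.$$

In particular, $\int_{-\infty}^{\infty} e^{-x^2/2}\,dx = \sqrt{2\pi}$, one of the very basic results in probability theory.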
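Both sides of the polar-coordinate computation can be checked numerically. The sketch below assumes a truncation of the infinite ranges at $12$ (the neglected tails are of order $e^{-72}$) and uses a plain composite midpoint rule; the helper name `midpoint_rule` is ours. The factor $r$ in the second integrand is exactly $|\det\varphi'(r, \theta)|$.

```python
import math

def midpoint_rule(g, a, b, n=100_000):
    """Approximate the integral of g over [a, b] with the composite midpoint rule."""
    h = (b - a) / n
    return h * sum(g(a + (k + 0.5) * h) for k in range(n))

# Truncation point for the infinite ranges; the neglected tails are of order e^{-72}.
L = 12.0

# Left-hand side: the one-dimensional Gaussian integral (to be squared).
one_dim = midpoint_rule(lambda x: math.exp(-x * x / 2), -L, L)

# Right-hand side after the polar transformation: the factor r is |det phi'(r, theta)|,
# and the theta-integral contributes the factor 2*pi.
polar = 2 * math.pi * midpoint_rule(lambda r: math.exp(-r * r / 2) * r, 0.0, L)

print(one_dim ** 2, polar, 2 * math.pi)
print(one_dim, math.sqrt(2 * math.pi))
```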

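Another little gem that follows from the Transformation Formula are the Fresnel integrals

$$\int_0^\infty \cos(x^2)\,dx = \int_0^\infty \sin(x^2)\,dx = \frac12\sqrt{\frac{\pi}{2}}.$$

They follow from the same basic trick given above for the standard normal density. As other methods for deriving this result involve even trickier uses of similarly hard techniques (the Residue Theorem, for instance, as given in Remmert’s book), we shall give the proof here:

Consider, for $t > 0$,

$$F(t) := \int_0^\infty e^{-t x^2}\cos(x^2)\,dx, \qquad G(t) := \int_0^\infty e^{-t x^2}\sin(x^2)\,dx.$$

Then, the trigonometric identity $\cos a\cos b - \sin a\sin b = \cos(a + b)$ tells us, using polar coordinates on the quarter plane and the substitution $u = r^2$,

$$F(t)^2 - G(t)^2 = \int_0^\infty\!\!\int_0^\infty e^{-t(x^2 + y^2)}\cos(x^2 + y^2)\,dx\,dy = \frac{\pi}{2}\int_0^\infty e^{-t r^2}\cos(r^2)\,r\,dr = \frac{\pi}{4}\int_0^\infty e^{-t u}\cos u\,du.$$

This integral can be evaluated by parts (twice) to show

$$\int_0^\infty e^{-t u}\cos u\,du = \frac{t}{1 + t^2},$$

which means

$$F(t)^2 - G(t)^2 = \frac{\pi}{4}\cdot\frac{t}{1 + t^2}.$$

Then we consider the product $F(t)\,G(t)$ and use the identity $\sin a\cos b + \cos a\sin b = \sin(a + b)$, as well as the symmetry of the integrand (in $x$ and $y$) and integration by parts, to get

$$2F(t)\,G(t) = \int_0^\infty\!\!\int_0^\infty e^{-t(x^2 + y^2)}\sin(x^2 + y^2)\,dx\,dy = \frac{\pi}{4}\int_0^\infty e^{-t u}\sin u\,du = \frac{\pi}{4}\cdot\frac{1}{1 + t^2}.$$

We thus find by the dominated convergence theorem, letting $t \to 0$ and writing $F(0)$, $G(0)$ for the Fresnel integrals,

$$F(0)^2 - G(0)^2 = 0$$

and

$$2F(0)\,G(0) = \frac{\pi}{4}.$$

One can easily show that both integrals must be positive, and from the first computation we get $F(0) = G(0)$; from the second computation it follows that $F(0)^2 = \frac{\pi}{8}$, i.e. the integrals have value $\sqrt{\pi/8} = \frac12\sqrt{\frac{\pi}{2}}$.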

q.e.d. (Fresnel integrals)
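The two identities for $F(t)$ and $G(t)$ can be checked numerically for a fixed damping parameter. The sketch below assumes $t = \frac12$ and a truncation of the integrals at $x = 12$ (both arbitrary choices; the damping makes the tail of order $e^{-72}$), and the helper names are ours. It then uses the observation that the two identities combine to $(F(t) + iG(t))^2 = \frac{\pi}{4}\cdot\frac{1}{t - i}$, which makes the limit $t \to 0$ explicit.

```python
import cmath
import math

def midpoint_rule(g, a, b, n=100_000):
    """Composite midpoint rule for the integral of g over [a, b]."""
    h = (b - a) / n
    return h * sum(g(a + (k + 0.5) * h) for k in range(n))

def F_and_G(t, upper=12.0):
    """Numerically evaluate the damped Fresnel integrals F(t) and G(t) for t > 0."""
    F = midpoint_rule(lambda x: math.exp(-t * x * x) * math.cos(x * x), 0.0, upper)
    G = midpoint_rule(lambda x: math.exp(-t * x * x) * math.sin(x * x), 0.0, upper)
    return F, G

t = 0.5
F, G = F_and_G(t)
print(F * F - G * G, math.pi / 4 * t / (1 + t * t))  # first identity
print(2 * F * G, math.pi / 4 / (1 + t * t))          # second identity

# At t = 0 the combined identity reads (F + iG)^2 = (pi/4) / (0 - i) = i * pi/4,
# whose principal square root is sqrt(pi/8) * (1 + i): both integrals equal sqrt(pi/8).
limit = cmath.sqrt(1j * math.pi / 4)
print(limit, math.sqrt(math.pi / 8))
```

Taking the principal square root matches the positivity of both Fresnel integrals used in the argument above.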

Even Brouwer’s Fixed Point Theorem may be concluded from the Transformation Formula (amongst a bunch of other theorems, none of which is actually as deep as this one, though). This is worthy of a separate text, mind you.
